Third-party Services Nightmares - Azure App Service

This article is part of a series in which I share real-life professional experiences where relying on third-party services to offload specific functionality went terribly wrong, when a tailor-made approach would probably have prevented the situation.

The Project

In 2021, a major research institute asked us to develop a microsite. This microsite showcased the images generated by one of the artificial intelligence models developed by the researchers.

It was set from the beginning that the project would reach an international audience, so the infrastructure and technologies needed to be well suited to this.

The microsite was primarily client-side: it presented information and then let users select a location. A request to the API queued that location, and the API later returned a rendering from the AI model, custom-generated from the user's selection.

The AI model and its infrastructure (queueing, inference, etc.) were outside our scope of work.

Infrastructure and Technological Choices

We opted for a Node.js application with caching for the rendered pages (HTML/JS/CSS). This simple, unidirectional web server was complemented by API endpoints for back-end functionalities. This primarily involved the following tasks and the related operations:

- Adding the user (and their home address) to the queue (query to an external RabbitMQ).

- Adding the user (and their email address) to the post-dated email mailing list (query to an external MongoDB).
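As a rough illustration of the two tasks above, here is a minimal sketch of what such an endpoint's logic could look like. It is not the project's actual code: `QueuePublisher`, `MailingListStore`, `handleRenderRequest`, the queue name, and the email delay are all hypothetical, and the real RabbitMQ and MongoDB clients are hidden behind small interfaces.

```typescript
// Hypothetical interfaces standing in for the real RabbitMQ and MongoDB clients.
interface QueuePublisher {
  publish(queue: string, message: string): Promise<void>;
}
interface MailingListStore {
  addSubscriber(email: string, sendAfter: Date): Promise<void>;
}

interface RenderRequest {
  address: string;
  email?: string;
}

interface ApiResult {
  statusCode: number;
  body: { ok: boolean; error?: string };
}

// Assumed values, chosen for illustration only.
const RENDER_QUEUE = "render-requests";
const EMAIL_DELAY_MS = 7 * 24 * 60 * 60 * 1000;

async function handleRenderRequest(
  input: RenderRequest,
  queue: QueuePublisher,
  mailingList: MailingListStore,
  now: Date = new Date()
): Promise<ApiResult> {
  const address = input.address.trim();
  if (address.length === 0) {
    return { statusCode: 400, body: { ok: false, error: "address is required" } };
  }
  // Task 1: queue the location so the AI model can render it.
  await queue.publish(RENDER_QUEUE, JSON.stringify({ address }));
  // Task 2: if an email was provided, register it for the post-dated mailing.
  if (input.email !== undefined && input.email.includes("@")) {
    const sendAfter = new Date(now.getTime() + EMAIL_DELAY_MS);
    await mailingList.addSubscriber(input.email, sendAfter);
  }
  // 202 Accepted: the rendering is produced asynchronously.
  return { statusCode: 202, body: { ok: true } };
}
```

Keeping the external clients behind interfaces like these also makes the endpoint easy to exercise in tests without a live queue or database.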

Since it was a non-profit project focused on climate change, Microsoft generously offered Azure platform credits for the project. We therefore developed the infrastructure around their products. Choosing App Service as the microsite platform was a natural fit:

- Out-of-box handling of CI/CD from GitHub.

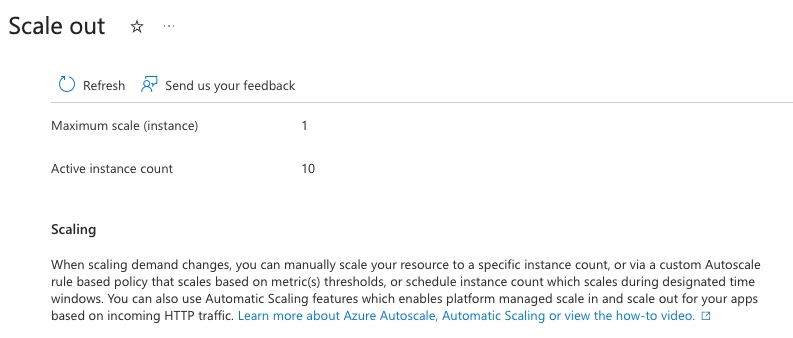

- Out-of-box handling of autoscaling.

- Out-of-box handling of OS, Node.js, and security updates.

- Out-of-box handling of SSL certificates and domain management.

As recommended, we implemented a health endpoint (/health) so that Azure could monitor each instance and manage scaling automatically.
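For readers unfamiliar with health endpoints, the following is a minimal sketch of the idea, not our production code. The `NamedProbe` type and `evaluateHealth` function are hypothetical names; the assumption is that the platform treats a 2xx response as healthy and anything else as unhealthy.

```typescript
// A probe resolves to true when the dependency it checks is reachable.
interface NamedProbe {
  label: string;
  run: () => Promise<boolean>;
}

interface HealthReport {
  statusCode: 200 | 503;
  checks: Record<string, boolean>;
}

// Runs every probe in parallel and reports 200 only if all of them pass.
async function evaluateHealth(probes: NamedProbe[]): Promise<HealthReport> {
  const checks: Record<string, boolean> = {};
  await Promise.all(
    probes.map(async (probe) => {
      try {
        checks[probe.label] = await probe.run();
      } catch {
        // A probe that throws counts as a failed check.
        checks[probe.label] = false;
      }
    })
  );
  const healthy = probes.every((probe) => checks[probe.label] === true);
  return { statusCode: healthy ? 200 : 503, checks };
}
```

The `/health` route would then reply with `statusCode` and the `checks` object as JSON. Probes should stay cheap and fast, since the platform calls this route repeatedly on every instance.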

Scaling tests with small groups were successfully conducted a few weeks before the launch.

What is an App Service Platform (PaaS)?

App Services are services that allow you to create, deploy, and scale web applications, backends, and RESTful APIs. They allow developers to focus on writing code and building applications without having to manage the underlying infrastructure, such as servers, operating systems, and network equipment.

Here are the main App Service providers at the time of writing:

The Problem

The site had been soft-launched a few days earlier and was running smoothly, without having been widely shared. Then, major media outlets around the world shared the article. In an instant, traffic jumped from 10 to 200,000 visits per hour, and we had no way of accurately predicting the impact of these articles.

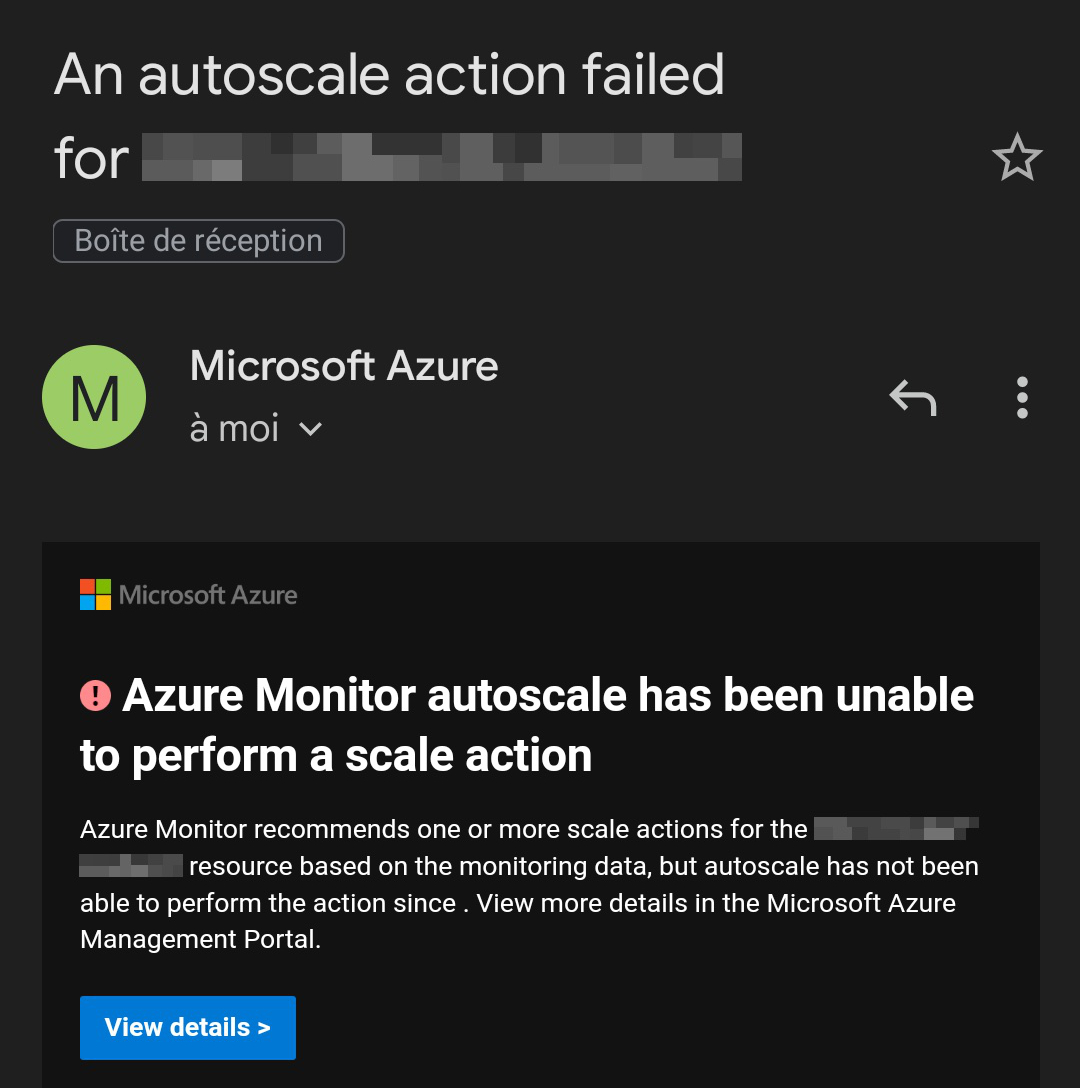

In theory, the automatic scaling that is the whole point of these types of platforms should have detected the surge in traffic in less than 5 minutes and triggered a horizontal scaling operation. However, this is not what happened. Instead, we received this email:

In the Azure logs, the following message was triggered with each scaling attempt:

Failed to update configuration for 'ASP-xyz'.

This region has quota of 0 PremiumV2 instances for your subscription.

Try selecting different region or SKU.

As unbelievable as it may seem, the data center we were using had run out of the selected type of VM, which prevented horizontal scaling, since scaling out required more of them. As a result, a single web server had to handle all the traffic.
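To make the failure mode concrete, here is a back-of-the-envelope sketch. Only the 200,000 visits per hour comes from our logs; the requests per visit, the per-instance capacity, and the function names are assumptions for illustration, and this is not how Azure computes its scaling decisions.

```typescript
interface ScalePlan {
  wanted: number;
  granted: number;
  shortfall: number;
}

// Estimates how many instances a load requires (capacity figures are assumptions).
function instancesNeeded(
  visitsPerHour: number,
  requestsPerVisit: number,
  requestsPerSecondPerInstance: number
): number {
  const requestsPerSecond = (visitsPerHour * requestsPerVisit) / 3600;
  return Math.max(1, Math.ceil(requestsPerSecond / requestsPerSecondPerInstance));
}

// A scale-out can only add as many instances as the region can still provide.
function planScaleOut(current: number, wanted: number, availableInRegion: number): ScalePlan {
  const extraWanted = Math.max(0, wanted - current);
  const extraGranted = Math.min(extraWanted, Math.max(0, availableInRegion));
  const granted = current + extraGranted;
  return { wanted, granted, shortfall: Math.max(0, wanted - granted) };
}
```

With an assumed 20 requests per visit and 150 requests per second per instance, `instancesNeeded(200000, 20, 150)` returns 8. With nothing available in the region, `planScaleOut(1, 8, 0)` returns `{ wanted: 8, granted: 1, shortfall: 7 }`: the autoscaler asks for more, and the platform has nothing to give.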

App Service platforms assume it's our responsibility to ensure that enough of the selected types of VMs are available in our region.

What are the solutions?

VM availability is a sneaky problem inherent to cloud architecture. Because the cloud is often portrayed as intangible, it doesn't come naturally to think that a featured VM type might suddenly become unavailable in sufficient quantities (or completely unavailable overnight). We received no notification of this unavailability.

First, it should be noted that it would have been wise of our team to increase the minimum number of VMs before the press release period. A more aggressive use of a CDN would also have eased the load on the Node.js application. Neither measure would have solved the automatic scaling problem, but both would have at least mitigated it.
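As an illustration of what "more aggressive use of a CDN" can mean in practice, here is a minimal sketch that picks a `Cache-Control` header per type of resource. The paths, file extensions, and durations are assumptions, and the long-lived policy presumes that static assets have fingerprinted file names.

```typescript
// Picks a Cache-Control header so a CDN can answer most requests
// without reaching the Node.js application.
function cacheControlFor(path: string): string {
  // Dynamic endpoints must never be cached.
  if (path.startsWith("/api/") || path === "/health") {
    return "no-store";
  }
  // Static assets (assumed to be fingerprinted) can be cached for a year.
  if (/\.(js|css|png|jpg|svg|woff2)$/.test(path)) {
    return "public, max-age=31536000, immutable";
  }
  // Rendered pages: short browser cache, slightly longer shared (CDN) cache.
  return "public, max-age=60, s-maxage=300";
}
```

Even a few minutes of shared caching on rendered pages means that a traffic spike mostly hits the CDN edge, leaving the origin to deal with API calls only.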

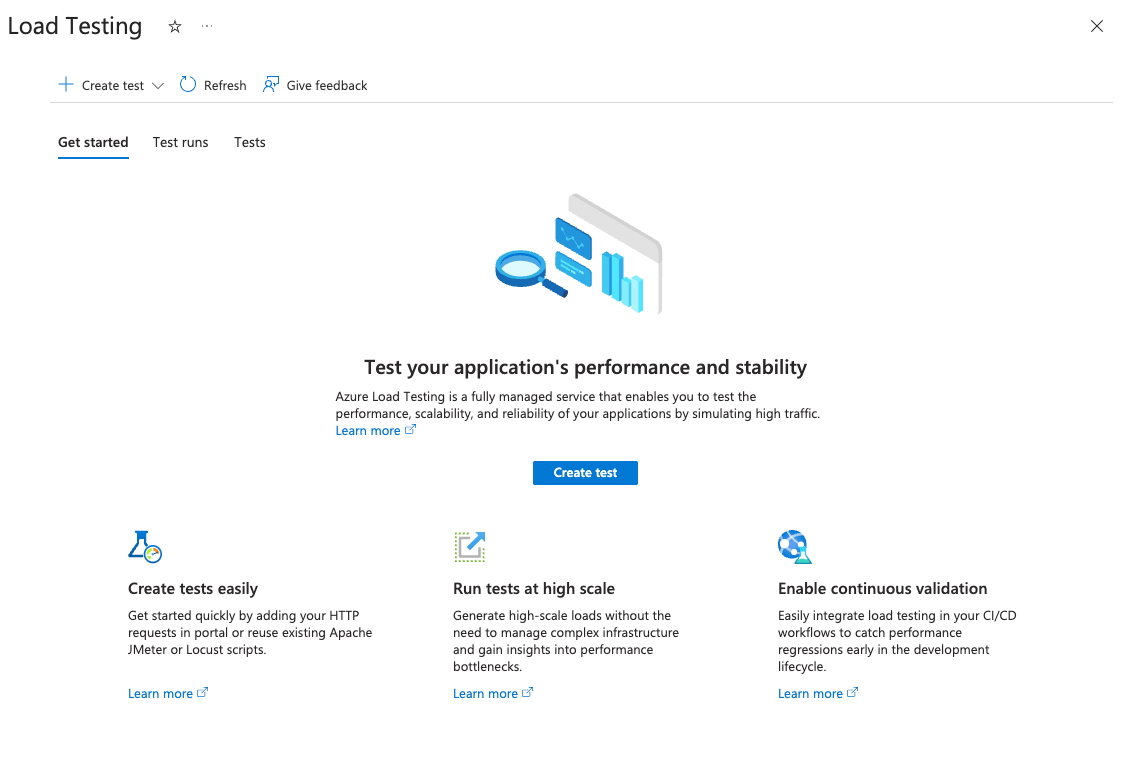

But most importantly, it would have been wise to conduct more thorough stress tests a few days before the launch. This would have triggered the scaling process and perhaps highlighted the lack of VMs of the selected type in the chosen region (data center). This is also applicable to all projects that use scaling functions, regardless of the cloud provider.
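A stress test does not need to be elaborate to be useful. The sketch below is a minimal, hypothetical load generator (`runLoadTest` and `percentile` are illustrative names, not part of any tool we used): it sends a fixed number of requests with bounded concurrency and reports failures and the 95th-percentile latency.

```typescript
interface LoadTestResult {
  total: number;
  succeeded: number;
  failed: number;
  p95Ms: number;
}

// Nearest-rank percentile over latencies sorted in ascending order.
function percentile(sortedMs: number[], p: number): number {
  if (sortedMs.length === 0) return 0;
  const rank = Math.ceil((p / 100) * sortedMs.length);
  const index = Math.min(sortedMs.length, Math.max(1, rank)) - 1;
  return sortedMs[index] ?? 0;
}

// Sends `totalRequests` requests, with at most `concurrency` in flight at once.
// `sendRequest` resolves to true on success; a rejection counts as a failure.
async function runLoadTest(
  sendRequest: () => Promise<boolean>,
  totalRequests: number,
  concurrency: number
): Promise<LoadTestResult> {
  const latencies: number[] = [];
  let started = 0;
  let succeeded = 0;
  let failed = 0;

  async function worker(): Promise<void> {
    while (started < totalRequests) {
      started += 1;
      const begin = Date.now();
      try {
        if (await sendRequest()) succeeded += 1;
        else failed += 1;
      } catch {
        failed += 1;
      }
      latencies.push(Date.now() - begin);
    }
  }

  const workers: Promise<void>[] = [];
  for (let i = 0; i < Math.max(1, concurrency); i += 1) {
    workers.push(worker());
  }
  await Promise.all(workers);
  latencies.sort((a, b) => a - b);
  return { total: totalRequests, succeeded, failed, p95Ms: percentile(latencies, 95) };
}
```

On Node.js 18 or later, `sendRequest` could be as simple as `() => fetch(url).then((r) => r.ok)`, pointed at a staging copy of the site. The important part is to sustain the load long enough for the platform to attempt a scale-out, and then to check the platform logs to confirm that new instances were actually granted.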

Moreover, Microsoft Azure's service offerings have greatly improved in this regard since the project was released. A load testing tool is now available directly within Azure App Service. This is an essential and highly valuable tool before any production deployment with any App Service platform.

Third-Party Service Nightmares

The non-intuitive limitations of third-party services cause many nightmares. They're often documented, but not highlighted. So we can only hope to come across the section of the documentation that mentions them and to grasp all the implications, which is more a matter of luck than professionalism. And since these limitations are inherent to the design of the third-party service (and not to the underlying technology), we can neither predict them nor know them from prior experience.

Sometimes, the flexibility and understanding of a tailored approach are unparalleled. Third-party services aren't magic.